Optimize price-performance of LLM inference on NVIDIA GPUs using the Amazon SageMaker integration with NVIDIA NIM Microservices

AWS Machine Learning Blog

MARCH 18, 2024

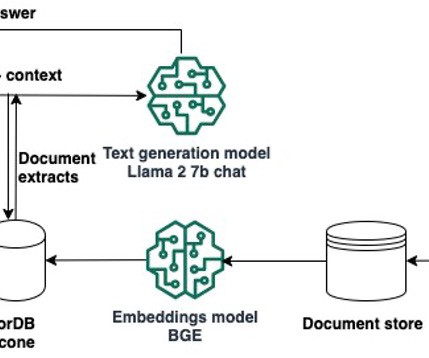

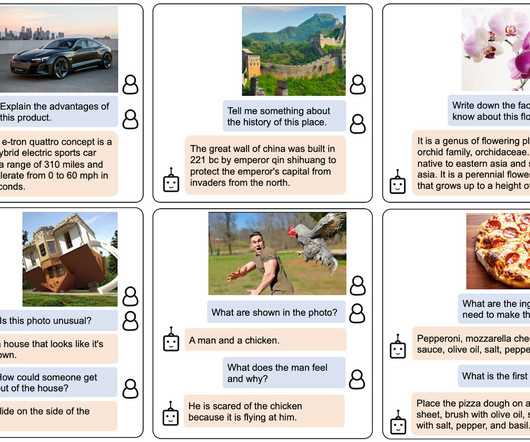

NVIDIA NIM microservices now integrate with Amazon SageMaker, allowing you to deploy industry-leading large language models (LLMs) and optimize model performance and cost. In this post, we provide a high-level introduction to NIM and show how you can use it with SageMaker.
