The Full Story of Large Language Models and RLHF

AssemblyAI

MAY 3, 2023

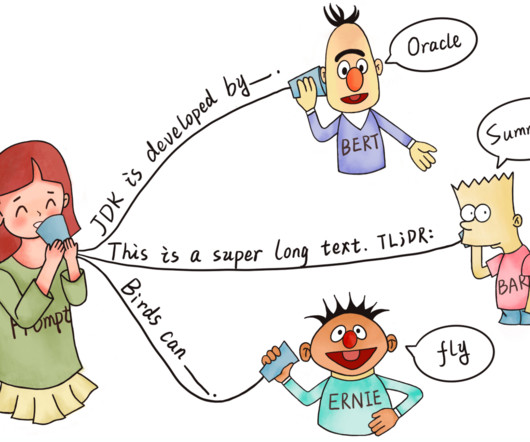

We are going to explore these and other essential questions from the ground up, without assuming prior technical knowledge of AI and machine learning.

During the training process, a language model (LM) is given a large corpus (dataset) of text and tasked with predicting the next word in a sentence.
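To make "predicting the next word" concrete, here is a minimal sketch in Python. It is not how a large language model is actually built (those use neural networks trained on vastly more text); it is a toy that simply counts which word follows which. The corpus and the function names `train_bigram_model` and `predict_next_word` are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for current_word, next_word in zip(words, words[1:]):
            counts[current_word][next_word] += 1
    return counts


def predict_next_word(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# A tiny, made-up corpus standing in for the "large corpus of text".
toy_corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]

model = train_bigram_model(toy_corpus)
print(predict_next_word(model, "the"))  # most common word after "the"
print(predict_next_word(model, "sat"))  # most common word after "sat"
```

In this toy corpus, "cat" follows "the" more often than any other word, so that is the prediction. A real LM does the same kind of guessing, but it looks at far more context than a single preceding word and learns its predictions rather than counting them directly.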
