Understanding BERT

Mlearning.ai

MARCH 2, 2023

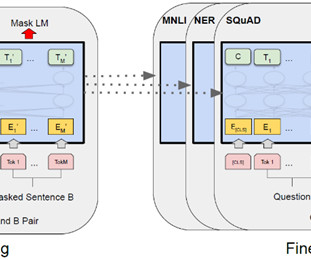

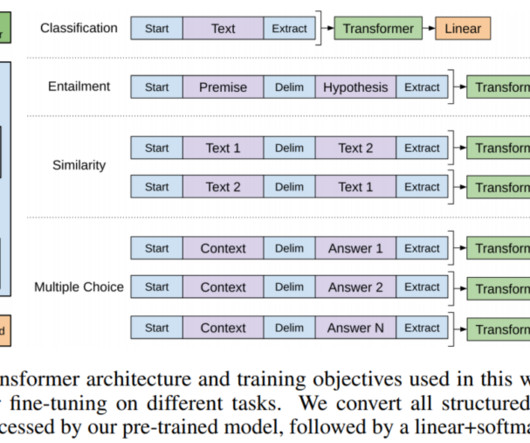

Pre-training of Deep Bidirectional Transformers for Language Understanding

BERT is a language model that can be fine-tuned for various NLP tasks and, at the time of publication, achieved several state-of-the-art results.

Preliminaries: Transformers and Unsupervised Transfer Learning

II.1 Transformers and Attention

II.2
