Reduce Amazon SageMaker inference cost with AWS Graviton

AWS Machine Learning Blog

MAY 10, 2023

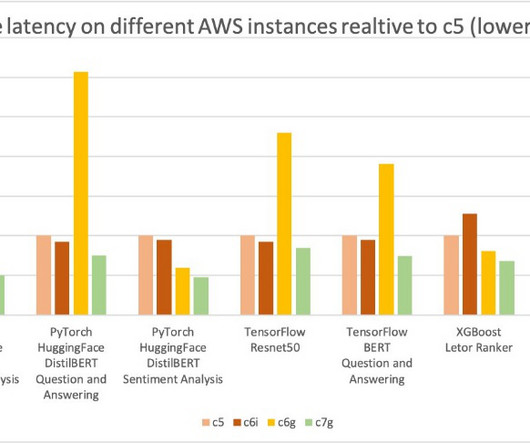

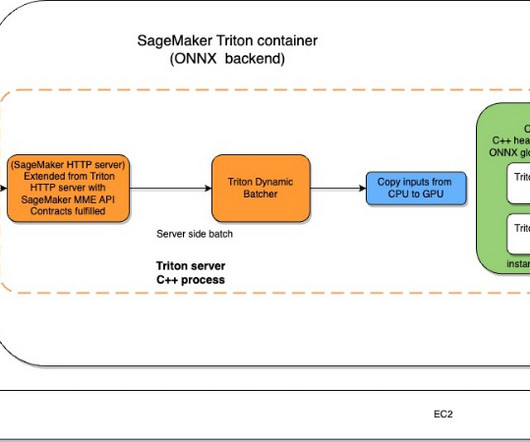

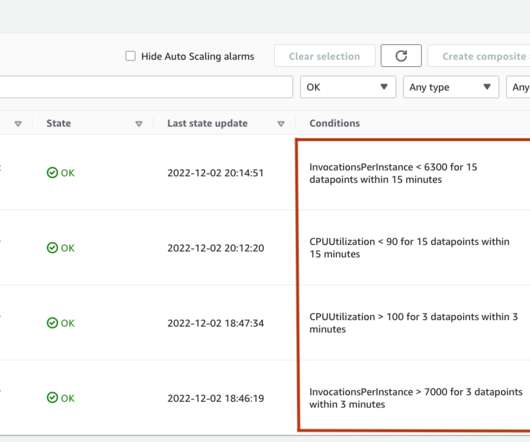

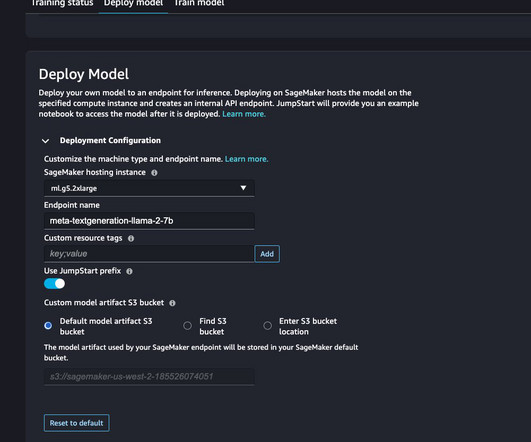

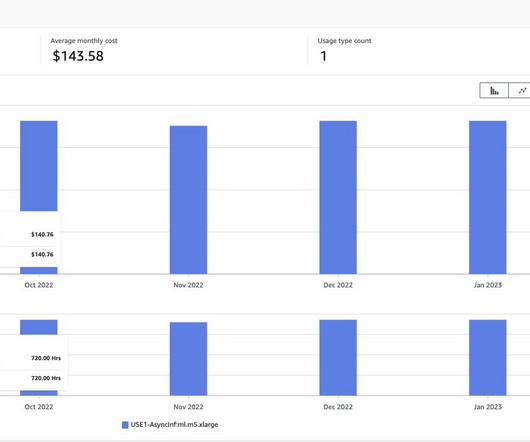

SageMaker provides multiple inference options so you can pick the one that best suits your workload. New generations of CPUs offer a significant performance improvement in ML inference thanks to specialized built-in instructions, and they reduce inference latency as well.

[Figure: c6g.4xlarge and c6i.4xlarge instances]
