AMD’s Strategic Play: Acquisition of Nod.ai to Challenge Nvidia’s Dominance

Unite.AI

OCTOBER 11, 2023

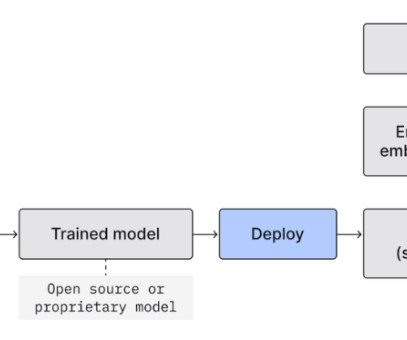

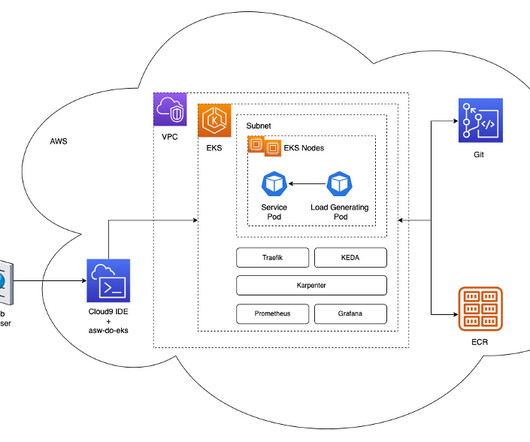

He emphasized that Nod.ai's technologies are not just innovative but are already widely deployed across cloud platforms, edge computing, and various endpoints. Nvidia's products, while advanced, come with a hefty price tag. This not only highlights the immediate value that Nod.ai
