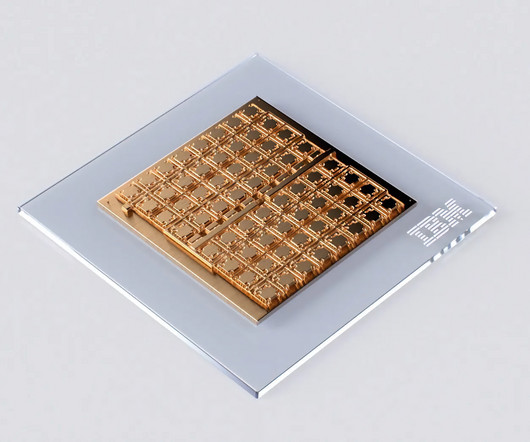

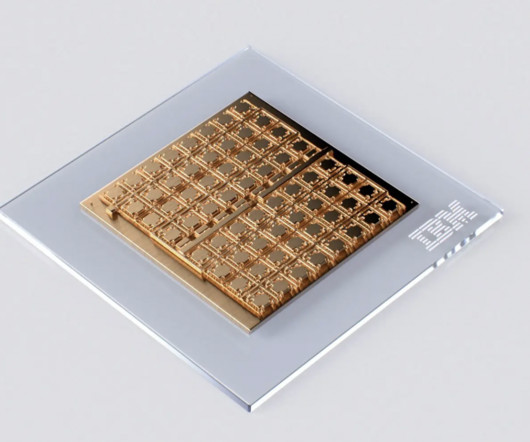

IBM Research unveils breakthrough analog AI chip for efficient deep learning

AI News

AUGUST 11, 2023

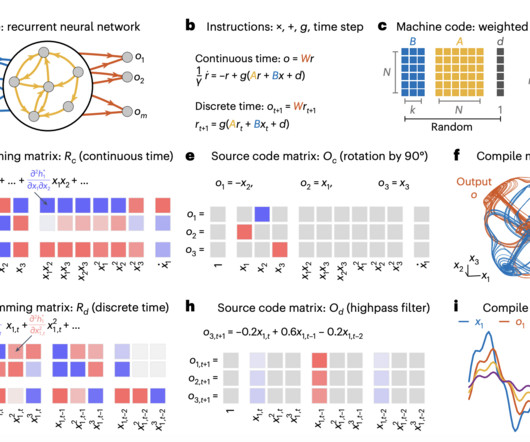

IBM Research has unveiled an analog AI chip that demonstrates high efficiency and accuracy in performing complex computations for deep neural networks (DNNs). To achieve this, IBM Research has harnessed the principles of analog AI, which emulates the way neural networks function in biological brains.
