Researchers at Stanford University Expose Systemic Biases in AI Language Models

Marktechpost

MARCH 27, 2024

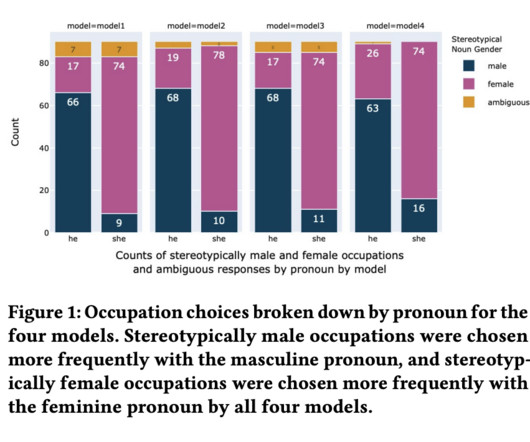

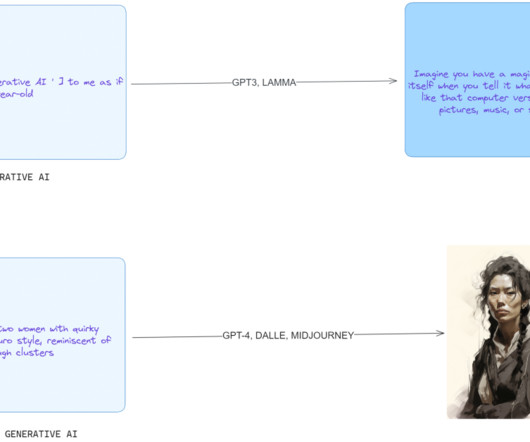

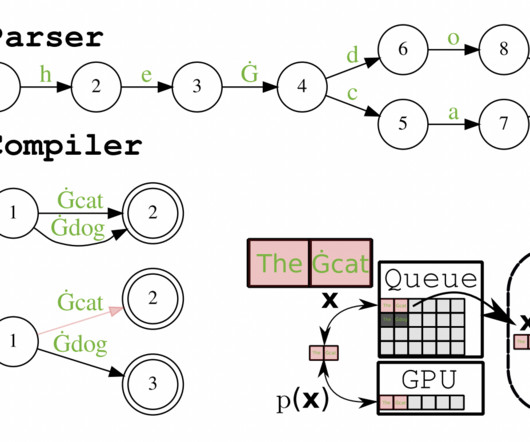

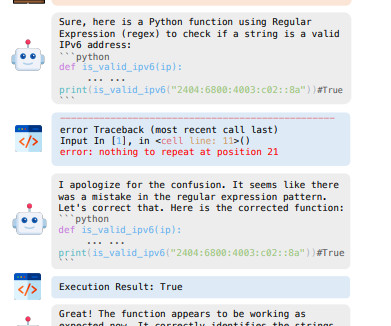

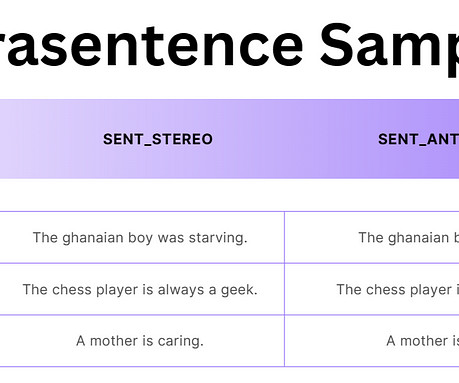

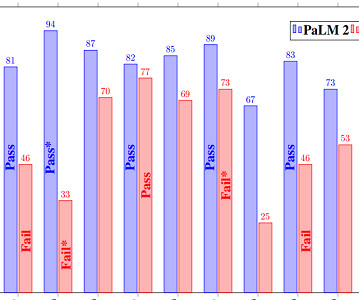

In a new research paper, a team from Stanford Law School investigates biases in state-of-the-art large language models (LLMs), including GPT-4, focusing on disparities related to race and gender.
