NLP Rise with Transformer Models | A Comprehensive Analysis of T5, BERT, and GPT

Unite.AI

NOVEMBER 8, 2023

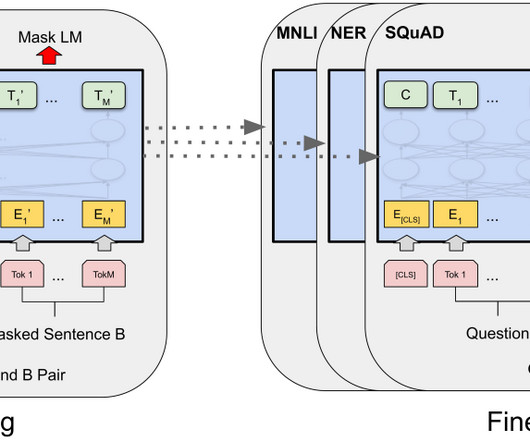

Natural Language Processing (NLP) has seen some of its most impactful breakthroughs in recent years, primarily due to the transformer architecture. Earlier word representations had clear limits: the major downside of one-hot encoding, for example, is that it treats each word as an isolated entity, with no relation to other words.
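To make the one-hot limitation concrete, here is a minimal sketch in plain Python. The three-word vocabulary and the helper names `one_hot` and `cosine` are illustrative assumptions, not something taken from the article. It shows that any two distinct one-hot vectors are orthogonal, so their cosine similarity is zero regardless of how related the words are in meaning.

```python
import math

def one_hot(word, vocab):
    """Return a vector with 1.0 at the word's vocabulary index, 0.0 elsewhere."""
    vec = [0.0] * len(vocab)
    vec[vocab.index(word)] = 1.0
    return vec

def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vocabulary, chosen only for illustration.
vocab = ["cat", "dog", "car"]
cat, dog, car = (one_hot(w, vocab) for w in vocab)

# "cat" is no closer to "dog" than it is to "car": both similarities are zero.
print(cosine(cat, dog))
print(cosine(cat, car))
# A word is only ever similar to itself.
print(cosine(cat, cat))
```

Because every pair of different words scores the same, a model that consumes one-hot vectors gets no signal that "cat" and "dog" are semantically closer than "cat" and "car". The vector length also grows with the vocabulary size, which is a second practical cost of this encoding.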
