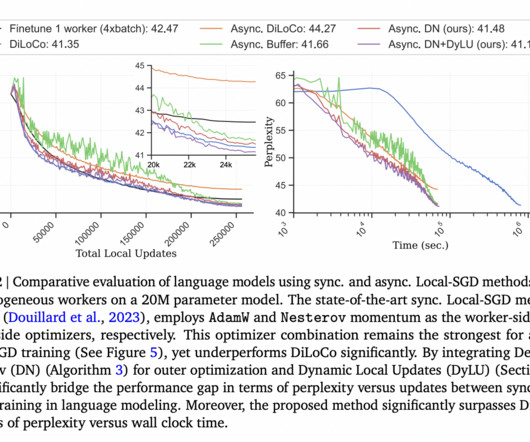

This Machine Learning Paper from DeepMind Presents a Thorough Examination of Asynchronous Local-SGD in Language Modeling

Marktechpost

JANUARY 23, 2024

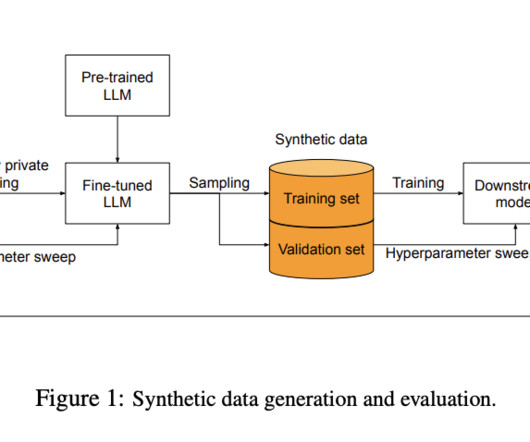

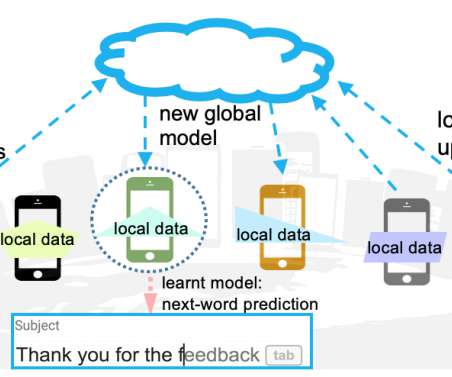

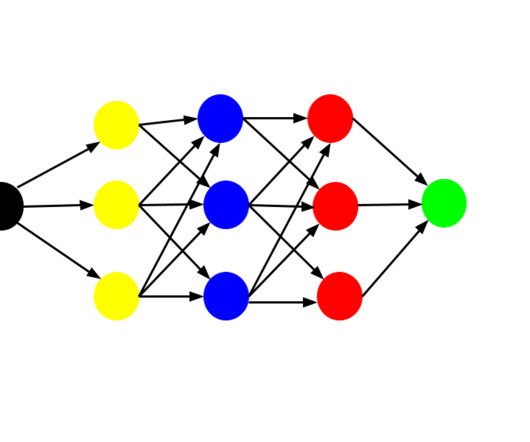

Traditionally, Local Stochastic Gradient Descent (Local-SGD), also known as federated averaging, is used in distributed optimization for language modeling. In this method, each device performs several local gradient steps before synchronizing its parameter updates with the other devices, which reduces how often the devices need to communicate.
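To make the idea concrete, here is a minimal sketch of the synchronous Local-SGD loop described above, applied to a toy least-squares problem rather than a language model. It is not the paper's implementation and does not cover the asynchronous variant. The function names (`local_sgd`, `make_shards`), the hyperparameters, and the use of a full-shard gradient in place of a sampled mini-batch are all assumptions chosen for illustration.

```python
import numpy as np


def make_shards(num_workers=4, samples=64, dim=3, seed=0):
    """Build hypothetical noise-free least-squares shards, one per worker."""
    rng = np.random.default_rng(seed)
    true_w = rng.normal(size=dim)
    shards = []
    for _ in range(num_workers):
        X = rng.normal(size=(samples, dim))
        shards.append((X, X @ true_w))
    return shards, true_w


def local_sgd(worker_data, dim, rounds=100, local_steps=4, lr=0.1):
    """Synchronous Local-SGD sketch: local steps, then parameter averaging."""
    global_w = np.zeros(dim)
    for _ in range(rounds):
        local_ws = []
        for X, y in worker_data:
            # Each worker starts the round from the shared parameters.
            w = global_w.copy()
            for _ in range(local_steps):
                # Assumption: full-shard gradient for determinism; a real
                # run would use a sampled mini-batch here.
                grad = X.T @ (X @ w - y) / len(y)
                w -= lr * grad
            local_ws.append(w)
        # One communication per round: average the workers' parameters.
        global_w = np.mean(local_ws, axis=0)
    return global_w


if __name__ == "__main__":
    shards, true_w = make_shards()
    w = local_sgd(shards, dim=true_w.shape[0])
    print("max abs error:", np.max(np.abs(w - true_w)))
```

With `local_steps=4`, the workers communicate once for every four gradient steps instead of after every step, which is the communication saving the paragraph refers to.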
