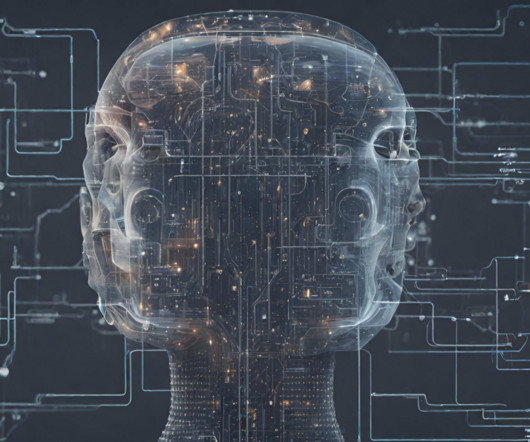

Introduction to Saliency Map in an Image with TensorFlow 2.x API

Analytics Vidhya

JUNE 8, 2022

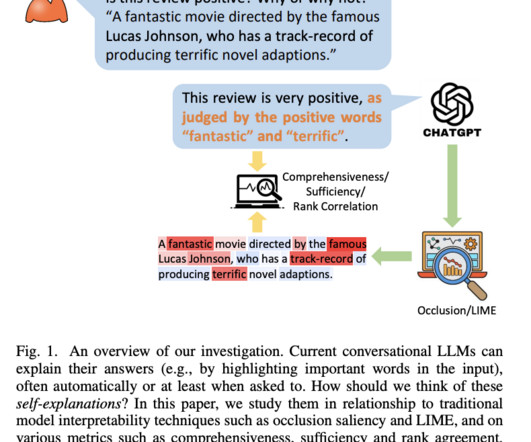

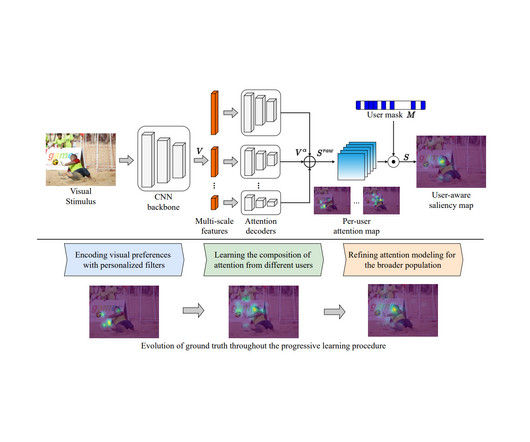

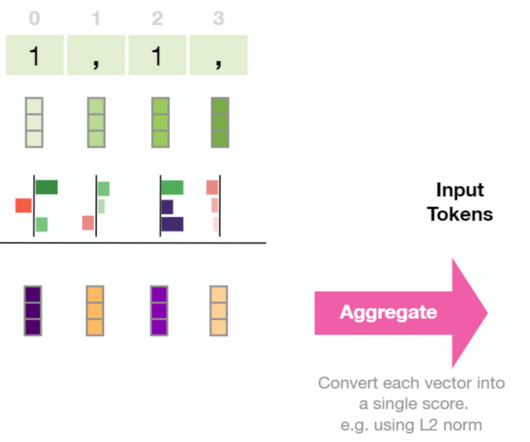

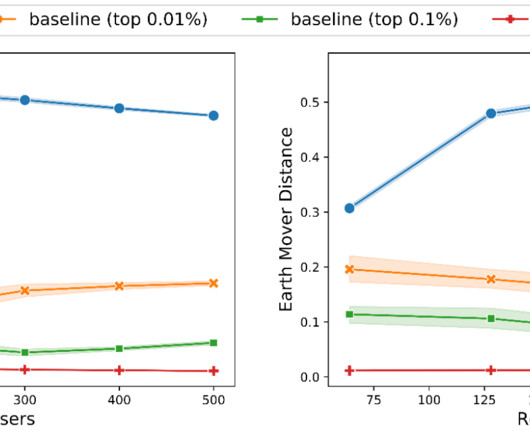

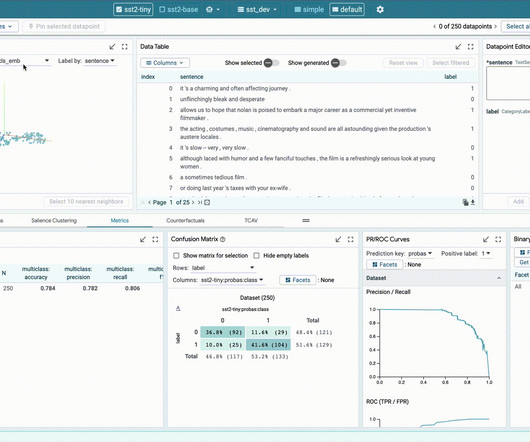

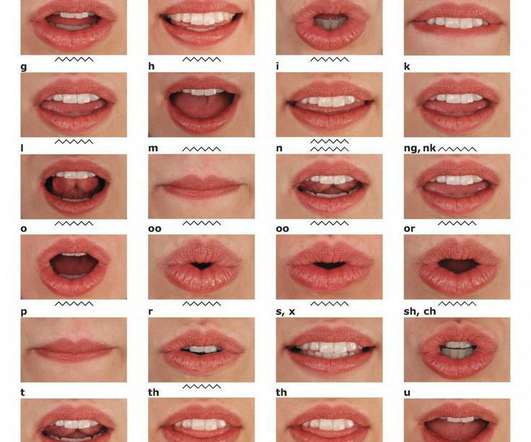

In the field of computer vision, a saliency map of an image highlights the regions on which a human’s gaze initially focuses. The main goal of a saliency map is to highlight the importance of a particular pixel to […].
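The excerpt stops before any code, so what follows is only a minimal sketch of one common way to build a saliency map with the TensorFlow 2.x API. It is not necessarily the method used in the original post. The sketch takes the gradient of a class score with respect to the input pixels using `tf.GradientTape`, then keeps the largest absolute gradient per pixel. The function name `vanilla_saliency`, the toy model, and the random input image are hypothetical stand-ins.

```python
import numpy as np
import tensorflow as tf


def vanilla_saliency(model, image, class_index=None):
    """Hypothetical helper: vanilla-gradient saliency map for one image.

    model: a tf.keras model mapping (batch, H, W, C) -> (batch, num_classes).
    image: array of shape (H, W, C).
    class_index: class to explain; defaults to the top-scoring class.
    Returns an (H, W) NumPy array scaled to [0, 1].
    """
    # Add a batch dimension and convert to a float tensor the tape can watch.
    x = tf.convert_to_tensor(image[np.newaxis, ...], dtype=tf.float32)
    with tf.GradientTape() as tape:
        tape.watch(x)
        scores = model(x, training=False)
        if class_index is None:
            class_index = int(tf.argmax(scores[0]))
        # Score of the class we want to explain.
        class_score = scores[0, class_index]
    # How much each input pixel (per channel) affects that class score.
    grads = tape.gradient(class_score, x)[0]          # shape (H, W, C)
    # Collapse channels: largest absolute gradient at each pixel.
    saliency = tf.reduce_max(tf.abs(grads), axis=-1)  # shape (H, W)
    # Min-max normalize for display; epsilon avoids division by zero.
    lo, hi = tf.reduce_min(saliency), tf.reduce_max(saliency)
    saliency = (saliency - lo) / (hi - lo + 1e-8)
    return saliency.numpy()


# Hypothetical toy classifier and random "image", only to exercise the function.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(32, 32, 3)),
    tf.keras.layers.Conv2D(8, 3, activation="relu"),
    tf.keras.layers.GlobalAveragePooling2D(),
    tf.keras.layers.Dense(10),
])
image = np.random.default_rng(0).random((32, 32, 3)).astype("float32")

saliency = vanilla_saliency(model, image)
print(saliency.shape)  # (32, 32)
```

With a trained classifier in place of the toy model, the returned array can be shown with a grayscale or heat colormap next to the original image. Bright pixels mark the inputs whose small changes would most affect the chosen class score.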
