This AI Paper Explores the Impact of Model Compression on Subgroup Robustness in BERT Language Models

Marktechpost

MARCH 28, 2024

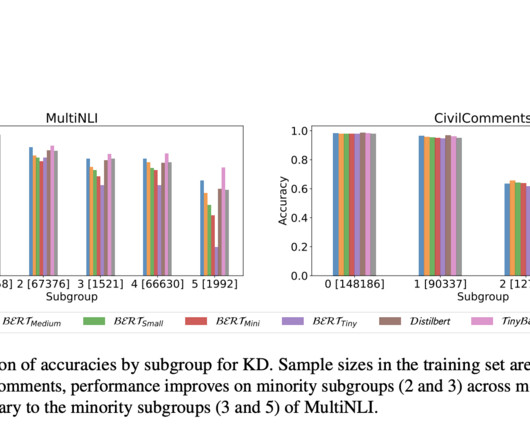

The study, by a research team from the University of Sussex, BCAM Severo Ochoa Strategic Lab on Trustworthy Machine Learning, Monash University, and expert.ai, uses the MultiNLI, CivilComments, and SCOTUS datasets to explore 18 different compression methods, including knowledge distillation, pruning, quantization, and vocabulary transfer.
