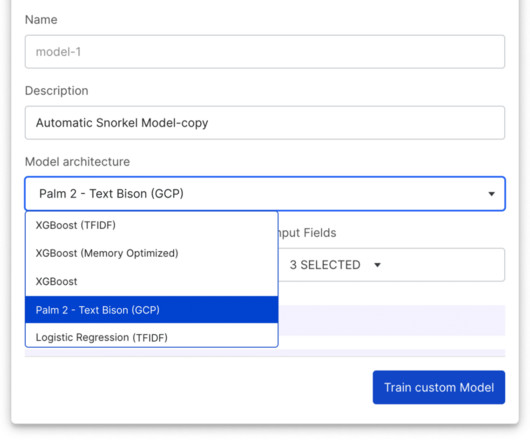

Crossing the demo-to-production chasm with Snorkel Custom

Snorkel AI

APRIL 11, 2024

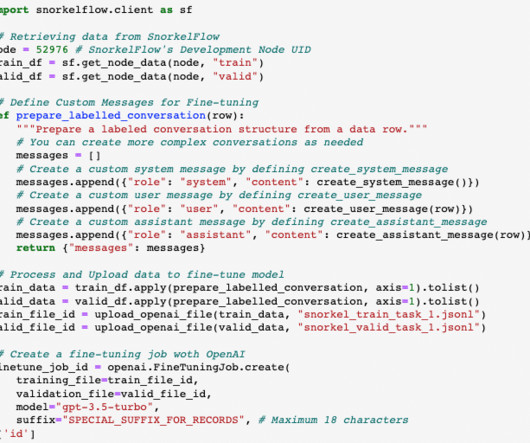

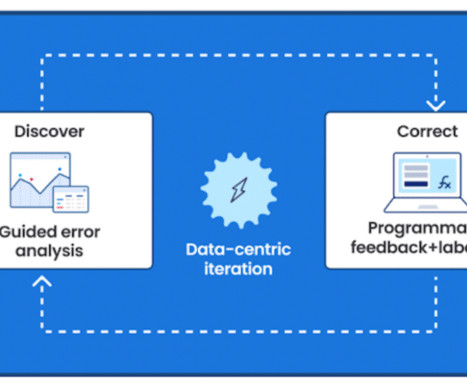

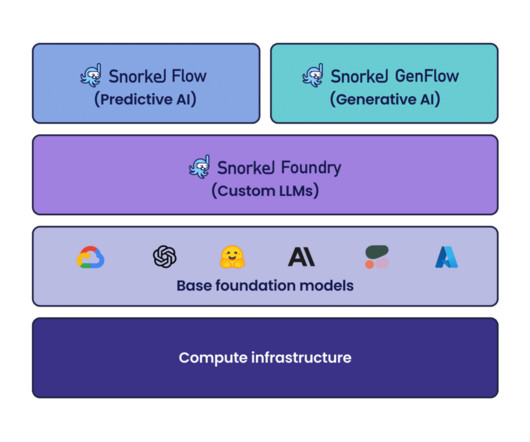

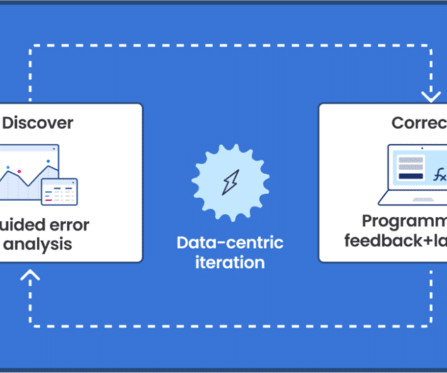

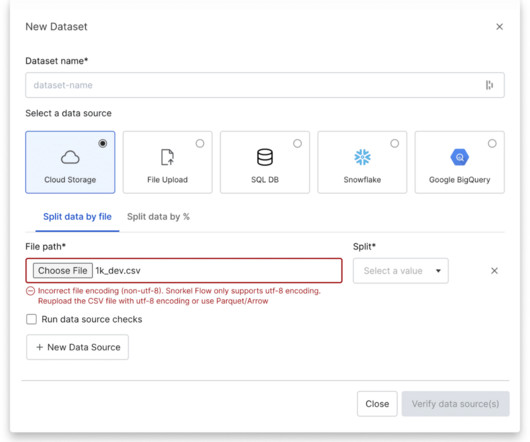

Today, I’m incredibly excited to announce our new offering, Snorkel Custom, to help enterprises cross the chasm from flashy chatbot demos to real production AI value. The Snorkel team has spent the last decade pioneering the practice of AI data development and making it programmatic, like software development.
