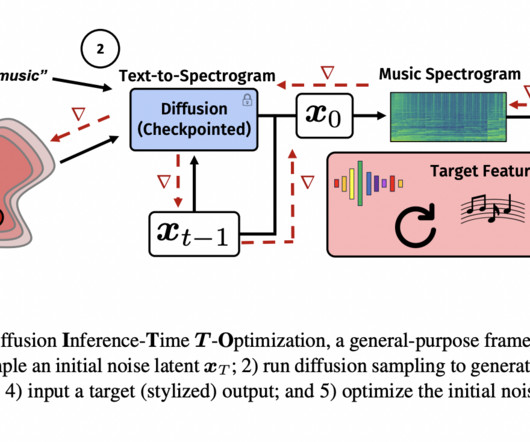

This AI Paper from Adobe and UCSD Presents DITTO: A General-Purpose AI Framework for Controlling Pre-Trained Text-to-Music Diffusion Models at Inference-Time via Optimizing Initial Noise Latents

Marktechpost

JANUARY 26, 2024

DITTO optimizes the initial noise latents of a pre-trained text-to-music diffusion model at inference time, steering generation toward specific, stylized outputs, and uses gradient checkpointing to keep memory use manageable. For training, the researchers used a dataset of 1,800 hours of licensed instrumental music tagged with genre, mood, and tempo.
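
To make the idea concrete, here is a minimal toy sketch of the two ingredients named above: gradient descent on the initial noise, and gradient checkpointing during backpropagation through the sampler. It is not DITTO's implementation. The `step` function is a hypothetical stand-in for one step of a real diffusion sampler, the "control target" is simply the mean of the output, and every name and hyperparameter here is an assumption chosen for illustration.

```python
import math
import random


def step(x):
    """One toy 'sampler' step (hypothetical stand-in for a diffusion step)."""
    return [0.9 * v + 0.1 * math.tanh(v) for v in x]


def step_grad(x, g):
    """Backpropagate the gradient g through step() evaluated at input x."""
    return [gv * (0.9 + 0.1 * (1.0 - math.tanh(v) ** 2)) for v, gv in zip(x, g)]


def sample_with_checkpoints(x0, num_steps, every):
    """Run the sampler forward, storing only every `every`-th state."""
    checkpoints = {0: list(x0)}
    x = list(x0)
    for t in range(1, num_steps + 1):
        x = step(x)
        if t % every == 0 and t < num_steps:
            checkpoints[t] = list(x)
    return x, checkpoints


def loss_and_grad(x0, target, num_steps=20, every=5):
    """Loss on the final output and its gradient w.r.t. the initial noise x0."""
    out, checkpoints = sample_with_checkpoints(x0, num_steps, every)
    mean = sum(out) / len(out)
    loss = (mean - target) ** 2  # toy control objective (assumption)
    g = [2.0 * (mean - target) / len(out)] * len(out)

    # Backward pass, one segment at a time, from the last checkpoint to the
    # first. The states inside each segment are recomputed from its
    # checkpoint rather than kept in memory during the forward pass.
    end = num_steps
    for start in sorted(checkpoints, reverse=True):
        states = [checkpoints[start]]
        for _ in range(start, end - 1):
            states.append(step(states[-1]))
        for x in reversed(states):
            g = step_grad(x, g)
        end = start
    return loss, g


def optimize_noise(target, dim=8, iters=300, lr=2.0, seed=0):
    """Gradient descent on the initial noise only; the sampler stays fixed."""
    rng = random.Random(seed)
    x0 = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for _ in range(iters):
        _, g = loss_and_grad(x0, target)
        x0 = [v - lr * gv for v, gv in zip(x0, g)]
    final_loss, _ = loss_and_grad(x0, target)
    return x0, final_loss


if __name__ == "__main__":
    x0, final_loss = optimize_noise(target=0.3)
    print("final loss:", final_loss)
```

With `every=5` and 20 steps, the forward pass keeps 4 states instead of 20 and pays for it by recomputing each segment once during the backward pass. That is the usual memory-for-compute trade that gradient checkpointing makes. In a real system the gradients would come from an autodiff framework rather than a hand-written `step_grad`.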
