Do Language Models Know When They Are Hallucinating? This AI Research from Microsoft and Columbia University Explores Detecting Hallucinations with the Creation of Probes

Marktechpost

DECEMBER 31, 2023

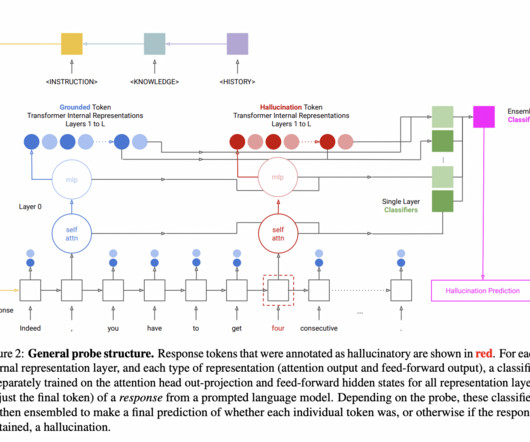

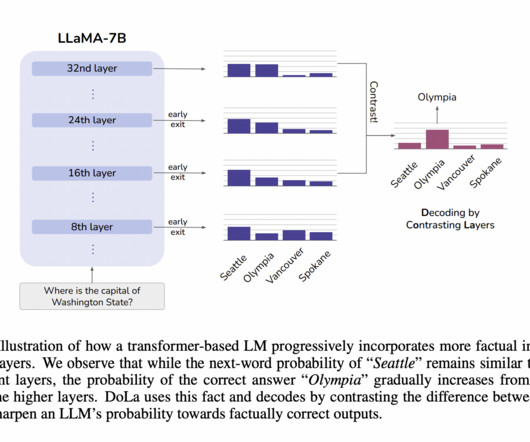

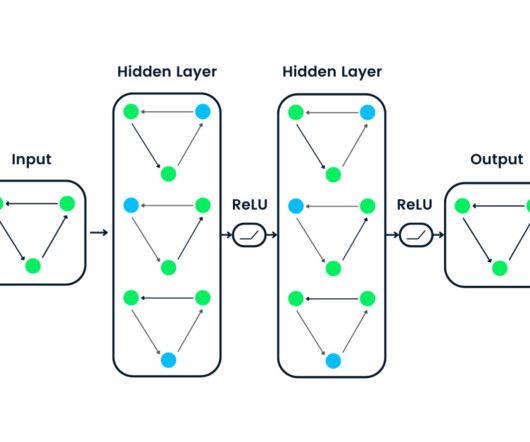

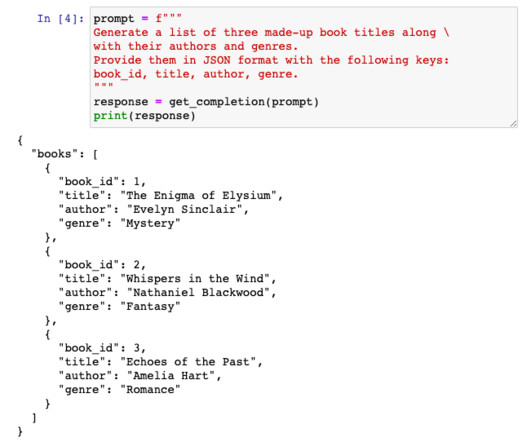

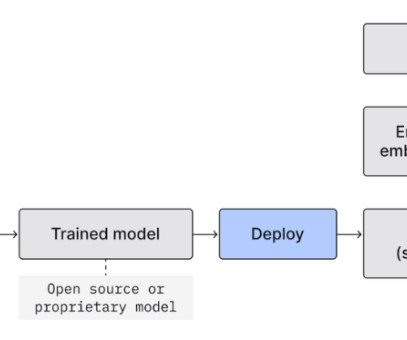

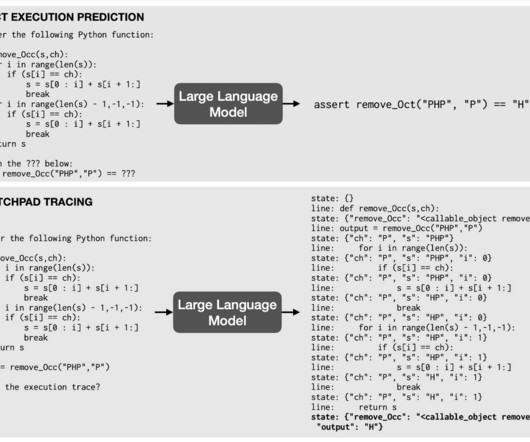

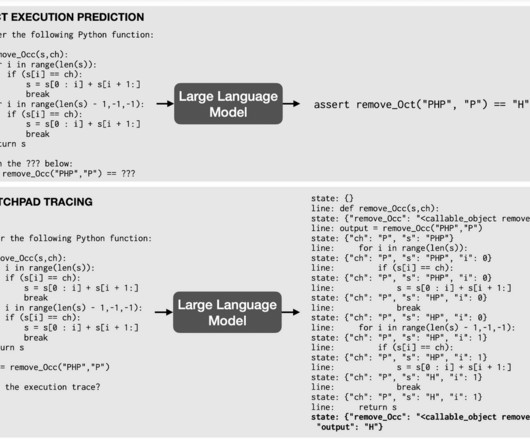

In recent research, a team from Microsoft and Columbia University studied hallucination detection in grounded generation tasks, focusing on language models and, in particular, decoder-only transformers. Hallucination detection aims to determine whether generated text is faithful to the input prompt or instead contains false or unsupported information.
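To make the idea of a detection "probe" concrete, here is a minimal sketch of a generic linear probe: a logistic-regression classifier trained on frozen hidden-state vectors to output the probability that a generation is hallucinated. This excerpt does not describe the authors' probe design, so this is not their method. The functions `train_linear_probe` and `hallucination_score` are hypothetical names, and the 4-dimensional "hidden states" are synthetic stand-ins drawn from two shifted Gaussians, not real decoder activations.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_linear_probe(features, labels, lr=0.1, epochs=200):
    """Fit a logistic-regression probe p = sigmoid(w.h + b) on frozen hidden states.

    features: list of hidden-state vectors (lists of floats), one per example.
    labels:   1 = hallucinated, 0 = grounded (faithful to the input).
    Hypothetical helper for illustration; the language model itself is never updated.
    """
    dim = len(features[0])
    w = [0.0] * dim
    b = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * dim
        grad_b = 0.0
        for h, y in zip(features, labels):
            p = sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)
            err = p - y  # gradient of binary cross-entropy w.r.t. the logit
            for i in range(dim):
                grad_w[i] += err * h[i]
            grad_b += err
        # Full-batch gradient descent step on the averaged gradient
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def hallucination_score(w, b, h):
    # Probe's estimated probability that hidden state h comes from a hallucination
    return sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)


# Hypothetical toy data: synthetic 4-dim "hidden states" from two shifted Gaussians.
rng = random.Random(0)
grounded = [[rng.gauss(-1.0, 0.5) for _ in range(4)] for _ in range(50)]
hallucinated = [[rng.gauss(1.0, 0.5) for _ in range(4)] for _ in range(50)]
X = grounded + hallucinated
y = [0] * 50 + [1] * 50

w, b = train_linear_probe(X, y)
accuracy = sum(
    (hallucination_score(w, b, h) >= 0.5) == bool(t) for h, t in zip(X, y)
) / len(y)
print(f"training accuracy: {accuracy:.2f}")
```

The probe is kept linear on purpose: if a simple classifier over frozen activations can separate grounded from hallucinated outputs, that suggests the model's internal states already encode the distinction. In practice the feature vectors would be taken from a chosen layer of the decoder, and the labels from annotated generations.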
