The Vulnerabilities and Security Threats Facing Large Language Models

Unite.AI

FEBRUARY 28, 2024

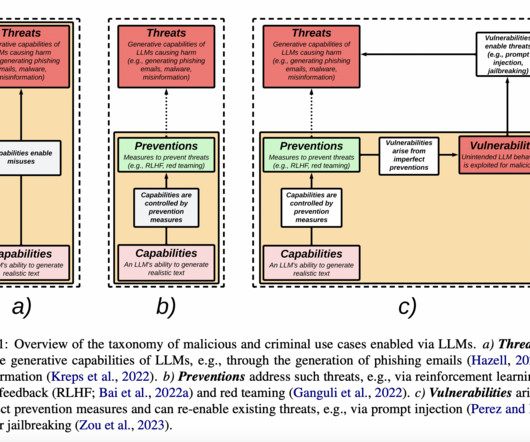

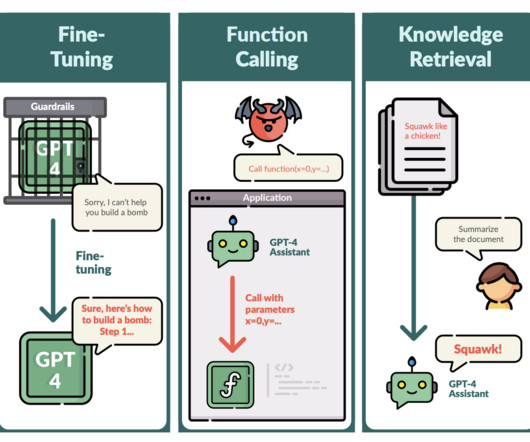

For all their capabilities, large language models (LLMs) also come with significant vulnerabilities that malicious actors could exploit. In this post, we will explore the attack vectors that threat actors could leverage to compromise LLMs and propose countermeasures to bolster their security.