Generative AI in the Healthcare Industry Needs a Dose of Explainability

Unite.AI

SEPTEMBER 13, 2023

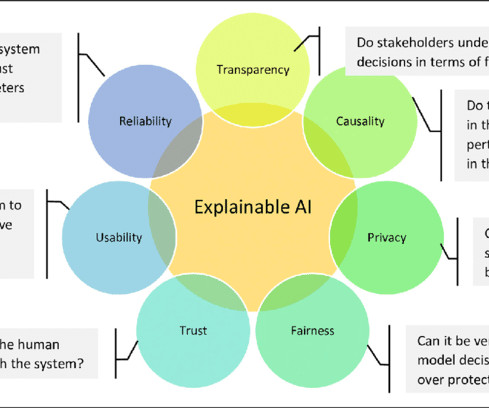

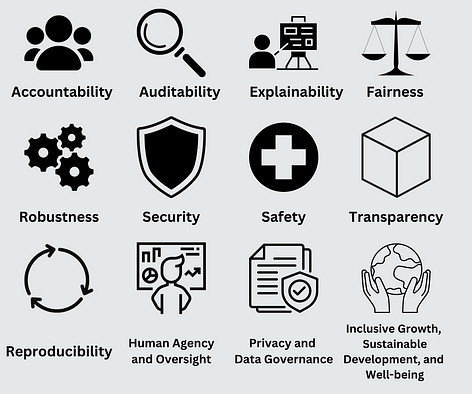

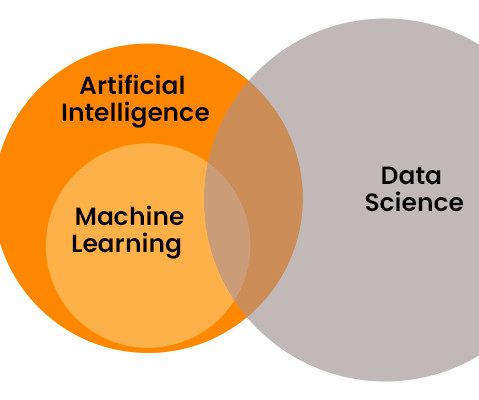

Increasingly, though, large datasets and the opaque pathways by which AI models generate their outputs are undermining the explainability that hospitals and healthcare providers need to trace and prevent potential inaccuracies. In this context, explainability refers to the ability to understand the logic by which any given large language model (LLM) arrives at its output.
