Neural Network Diffusion: Generating High-Performing Neural Network Parameters

Marktechpost

FEBRUARY 28, 2024

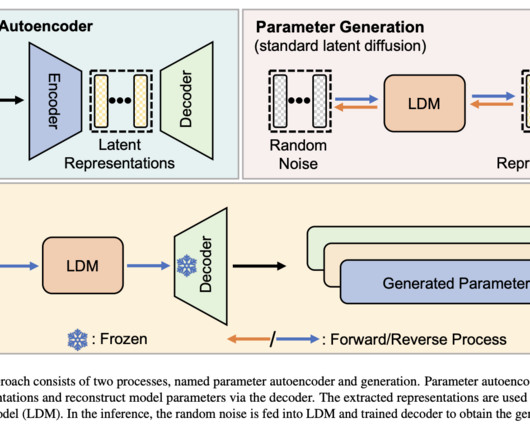

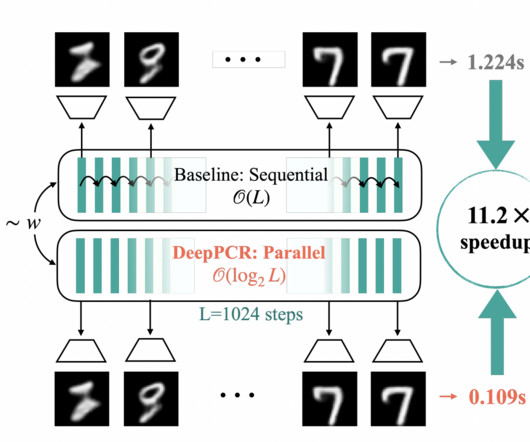

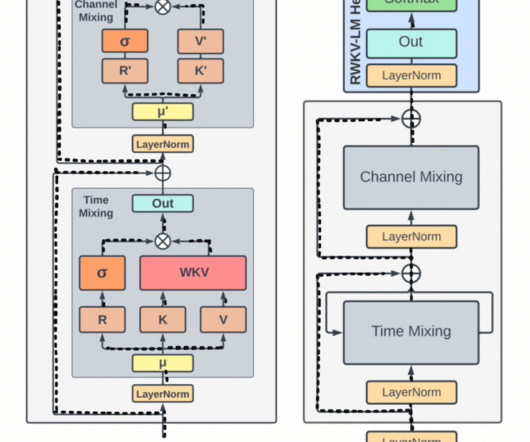

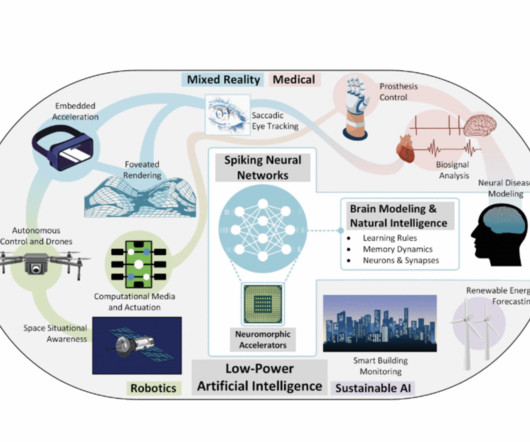

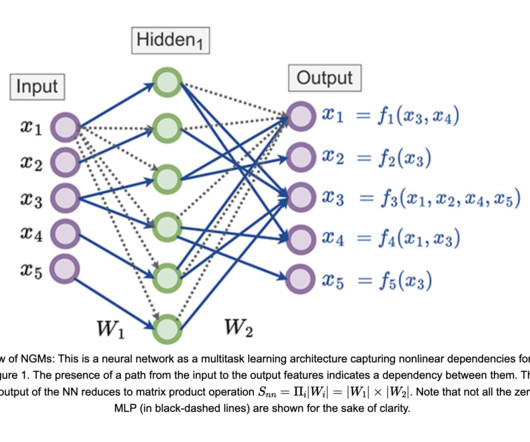

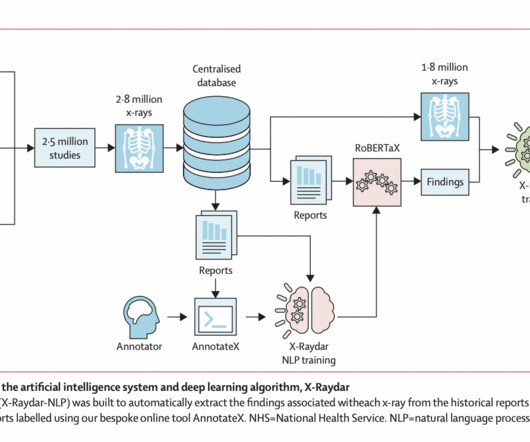

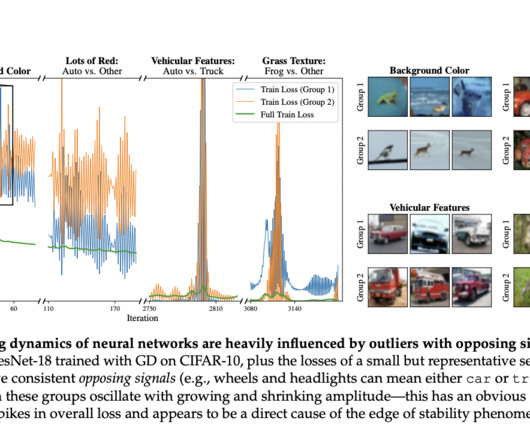

Parameter generation, unlike visual generation, aims to produce neural network parameters that perform well on a given task. Researchers from the National University of Singapore, the University of California, Berkeley, and Meta AI Research have proposed neural network diffusion, a novel approach to parameter generation.
