Who Is Responsible If Healthcare AI Fails?

Unite.AI

JUNE 26, 2023

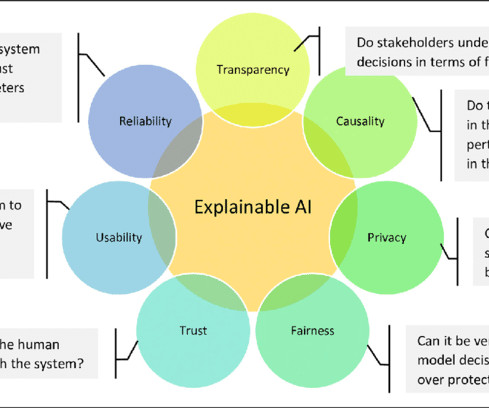

Similarly, what if a drug diagnosis algorithm recommends the wrong medication for a patient and they suffer a negative side effect? At the root of AI mistakes like these is the nature of AI models themselves. Most AI systems today use "black box" logic, meaning no one, not even the system's developers, can see how the algorithm arrives at its decisions.
