Host the Whisper Model on Amazon SageMaker: exploring inference options

AWS Machine Learning Blog

JANUARY 16, 2024

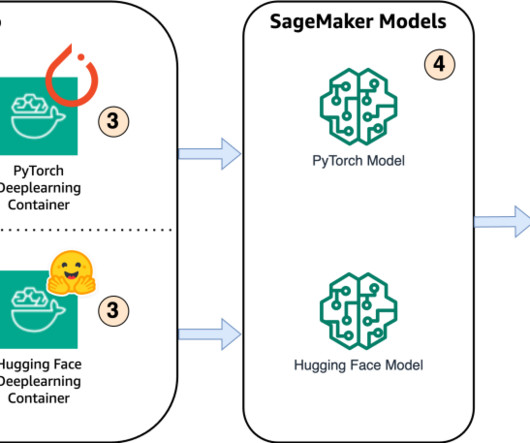

When saving model artifacts in the local repository, the first step is to save the model’s learnable parameters, such as model weights and biases of each layer in the neural network, as a ‘pt’ file. The tokenizer and preprocessor also need to be saved separately to ensure the Hugging Face model works properly.

Let's personalize your content