Modular functions design for Advanced Driver Assistance Systems (ADAS) on AWS

AWS Machine Learning Blog

FEBRUARY 23, 2023

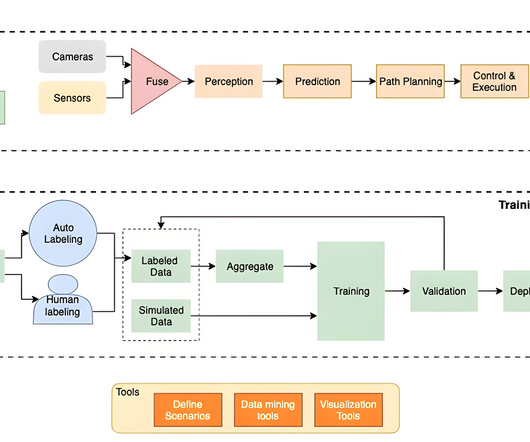

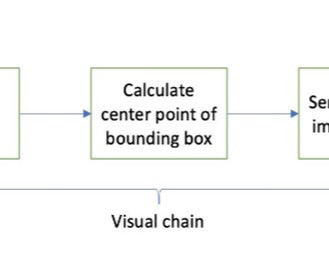

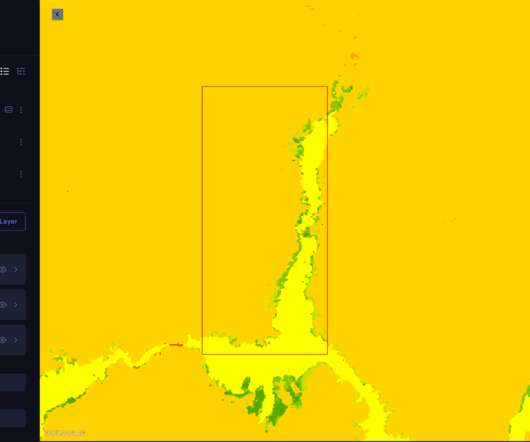

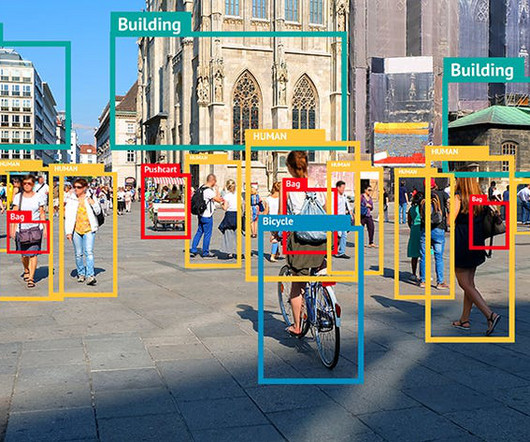

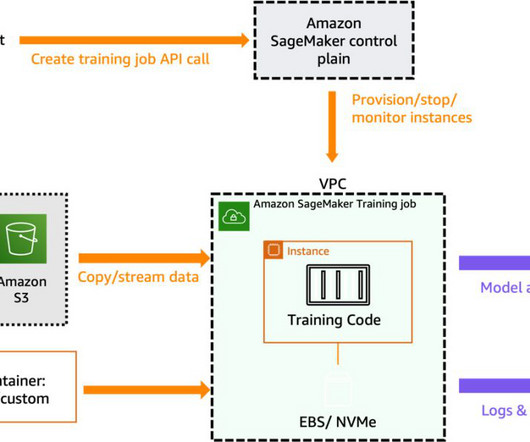

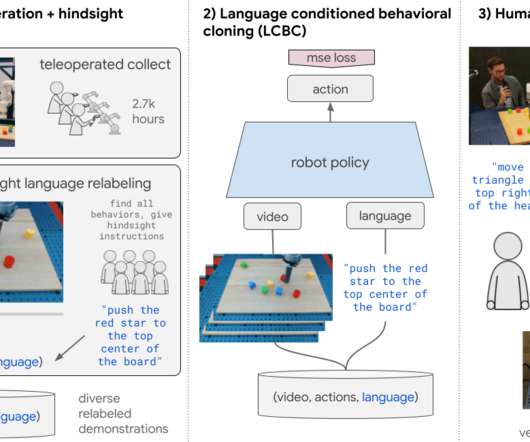

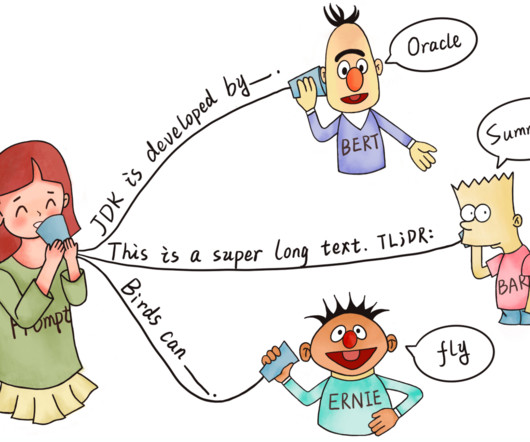

Modular training – With a modular pipeline design, the system is split into individual functional units (for example, perception, localization, prediction, and planning).

Depending on the type of ADAS, you will see a combination of the following devices:

Cameras – Visual sensors conceptually similar to human vision.
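To make the modular split concrete, here is a minimal Python sketch of a pipeline built from the four functional units named above. It is only an illustration under stated assumptions, not the design used in the post: the `Module` class, the `Frame` dictionary, the stage functions, and the 15-meter braking threshold are all hypothetical placeholders, and each stage returns canned data instead of running a trained model.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

# Hypothetical shared state handed from one functional unit to the next.
Frame = Dict[str, Any]


@dataclass
class Module:
    """One functional unit of the pipeline (hypothetical interface)."""
    name: str
    run: Callable[[Frame], Frame]


def perception(frame: Frame) -> Frame:
    # Placeholder: a real unit would run a detector on the camera data.
    return {**frame, "objects": [{"id": 1, "distance_m": 12.0}]}


def localization(frame: Frame) -> Frame:
    # Placeholder: a real unit would estimate the ego vehicle pose.
    return {**frame, "ego_pose": (0.0, 0.0, 0.0)}


def prediction(frame: Frame) -> Frame:
    # Placeholder: assume every detected object stays where it is.
    return {**frame, "predicted": [dict(obj) for obj in frame["objects"]]}


def planning(frame: Frame) -> Frame:
    # Placeholder rule: brake if any predicted object is closer than 15 m.
    nearest = min(
        (obj["distance_m"] for obj in frame["predicted"]),
        default=float("inf"),
    )
    return {**frame, "action": "brake" if nearest < 15.0 else "cruise"}


PIPELINE: List[Module] = [
    Module("perception", perception),
    Module("localization", localization),
    Module("prediction", prediction),
    Module("planning", planning),
]


def run_pipeline(frame: Frame, modules: List[Module] = PIPELINE) -> Frame:
    """Pass the frame through each module in order."""
    for module in modules:
        frame = module.run(frame)
    return frame


result = run_pipeline({"camera": "frame-0001"})
print(result["action"])
```

Because each unit only reads and extends the shared frame, a single stage can be retrained, tested, or swapped out without touching the others, which is the main appeal of the modular approach.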
