Igor Jablokov, Pryon: Building a responsible AI future

AI News

APRIL 25, 2024

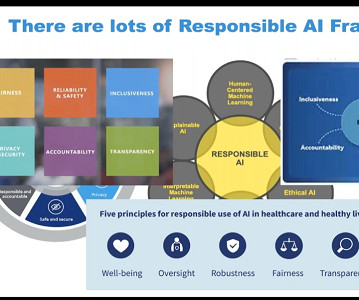

The current incarnation of Pryon aims to confront AI's ethical quandaries through responsible design focused on critical infrastructure and high-stakes use cases. "[We wanted to] create something purposely hardened for more critical infrastructure, essential workers, and more serious pursuits," Jablokov explained.