New Neural Model Enables AI-to-AI Linguistic Communication

Unite.AI

MARCH 24, 2024

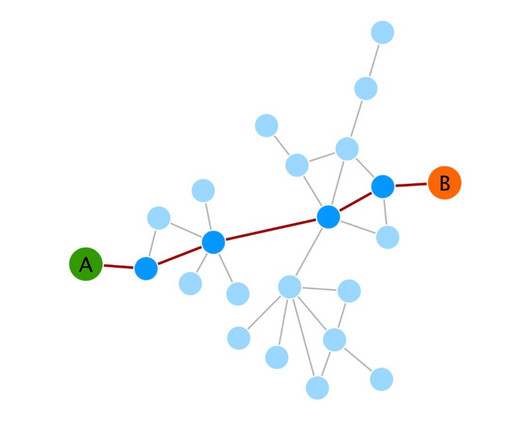

In a significant leap forward for artificial intelligence (AI), a team from the University of Geneva (UNIGE) has developed a model that emulates a uniquely human trait: performing tasks based on verbal or written instructions and subsequently communicating those tasks to others.
