Saurabh Vij, CEO & Co-Founder of MonsterAPI – Interview Series

Unite.AI

MAY 28, 2024

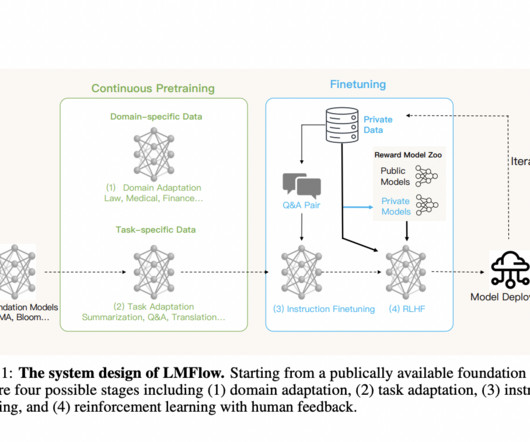

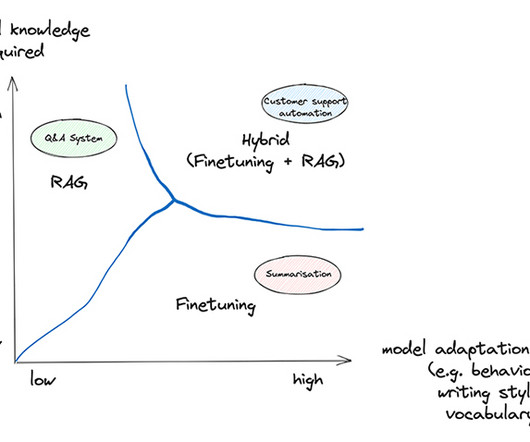

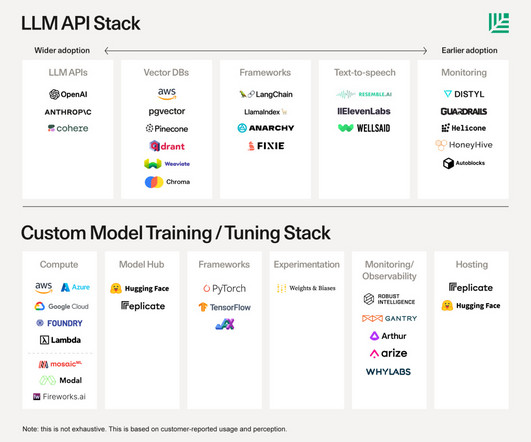

MonsterAPI uses lower-cost commodity GPUs, sourced from crypto mining farms and smaller idle data centres, to provide scalable, affordable GPU infrastructure for machine learning. Developers can access, fine-tune, and deploy AI models at significantly reduced cost without writing a single line of code.
