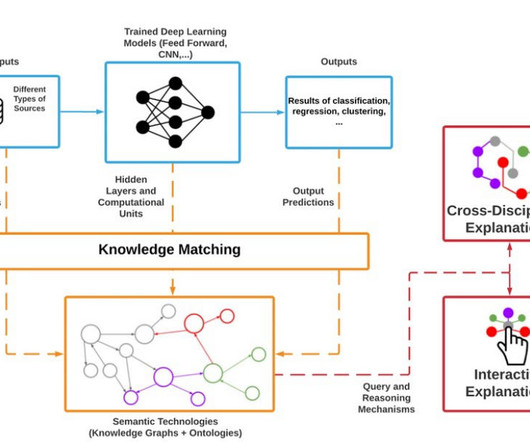

Explainable AI: Demystifying the Black Box Models

Analytics Vidhya

OCTOBER 25, 2023

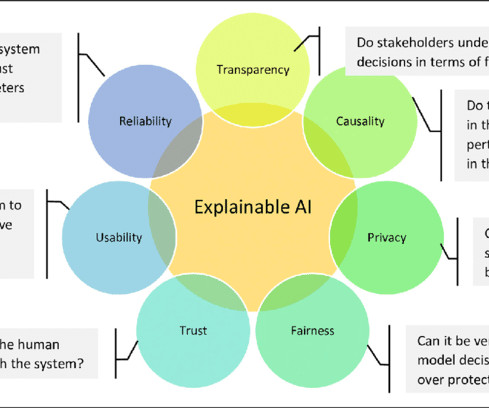

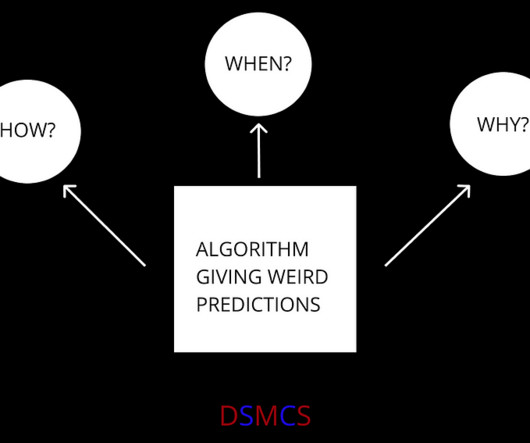

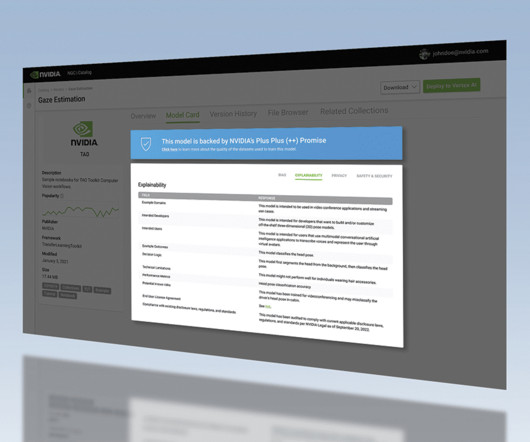

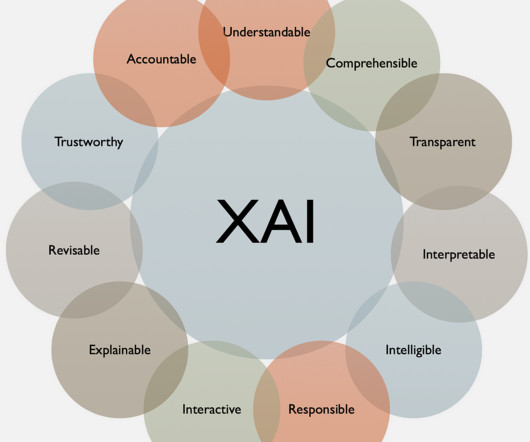

Explainable AI aims to make machine learning models more transparent to the people they affect, such as clients, patients, or loan applicants, which helps build trust and social acceptance of these systems. Different models call for different explanation methods, and the right method also depends on the audience.
