Researchers Study Tensor Networks for Interpretable and Efficient Quantum-Inspired Machine Learning

Marktechpost

DECEMBER 1, 2023

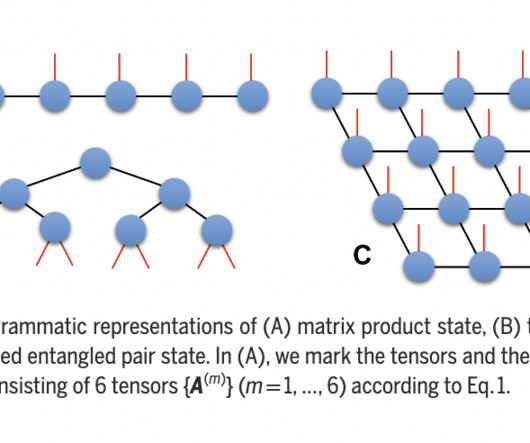

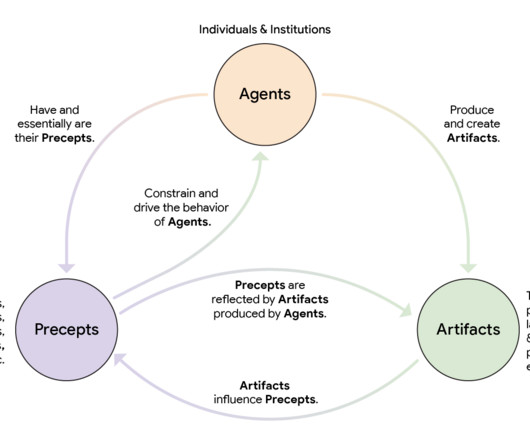

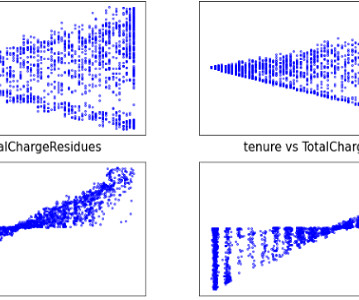

The benefits of tensor networks (TNs) for quantum-inspired machine learning (ML) can be summed up in two main areas: the interpretability that quantum theories offer and the efficiency of quantum procedures.
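The efficiency point can be made concrete with a small sketch. It assumes a matrix product state (MPS), one widely used tensor network layout. The article does not name a specific network, and the helper names `random_mps`, `mps_param_count`, and `mps_entry` are hypothetical. An MPS stores a tensor with N indices of dimension d as N small cores whose size is capped by a bond dimension χ. The parameter count therefore grows roughly as N·d·χ² instead of dᴺ, and any single entry is recovered by multiplying one matrix slice per site.

```python
import random

# Illustrative sketch only: an open-boundary matrix product state (MPS).
# The MPS layout and all function names here are assumptions for illustration.


def random_mps(n_sites, phys_dim, bond_dim, seed=0):
    """Build random MPS cores; core k has shape (left_bond, phys_dim, right_bond)."""
    rng = random.Random(seed)
    cores = []
    for k in range(n_sites):
        left = 1 if k == 0 else bond_dim             # boundary cores have bond size 1
        right = 1 if k == n_sites - 1 else bond_dim
        core = [[[rng.uniform(-1.0, 1.0) for _ in range(right)]
                 for _ in range(phys_dim)]
                for _ in range(left)]
        cores.append(core)
    return cores


def mps_param_count(cores):
    """Total number of stored numbers across all cores."""
    return sum(len(c) * len(c[0]) * len(c[0][0]) for c in cores)


def mps_entry(cores, indices):
    """Contract the MPS for one configuration (i_1, ..., i_N).

    Equivalent to the matrix product A_1[i_1] @ A_2[i_2] @ ... @ A_N[i_N],
    carried out left to right as a running row vector.
    """
    vec = [1.0]  # left boundary: a length-1 row vector
    for core, i in zip(cores, indices):
        left, right = len(core), len(core[0][0])
        new = [0.0] * right
        for a in range(left):
            for b in range(right):
                new[b] += vec[a] * core[a][i][b]
        vec = new
    return vec[0]  # right boundary has bond size 1


if __name__ == "__main__":
    cores = random_mps(n_sites=20, phys_dim=2, bond_dim=4)
    print(mps_param_count(cores))  # 592 numbers stored in the MPS
    print(2 ** 20)                 # 1048576 entries in the equivalent dense tensor
    print(mps_entry(cores, [0, 1] * 10))
```

With χ = 4 and 20 binary sites, the MPS holds 592 numbers while the dense tensor it represents has over a million entries. The trade-off is that an MPS can only represent tensors whose correlations fit within the chosen bond dimension.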
